Welcome to the Sloppocalypse

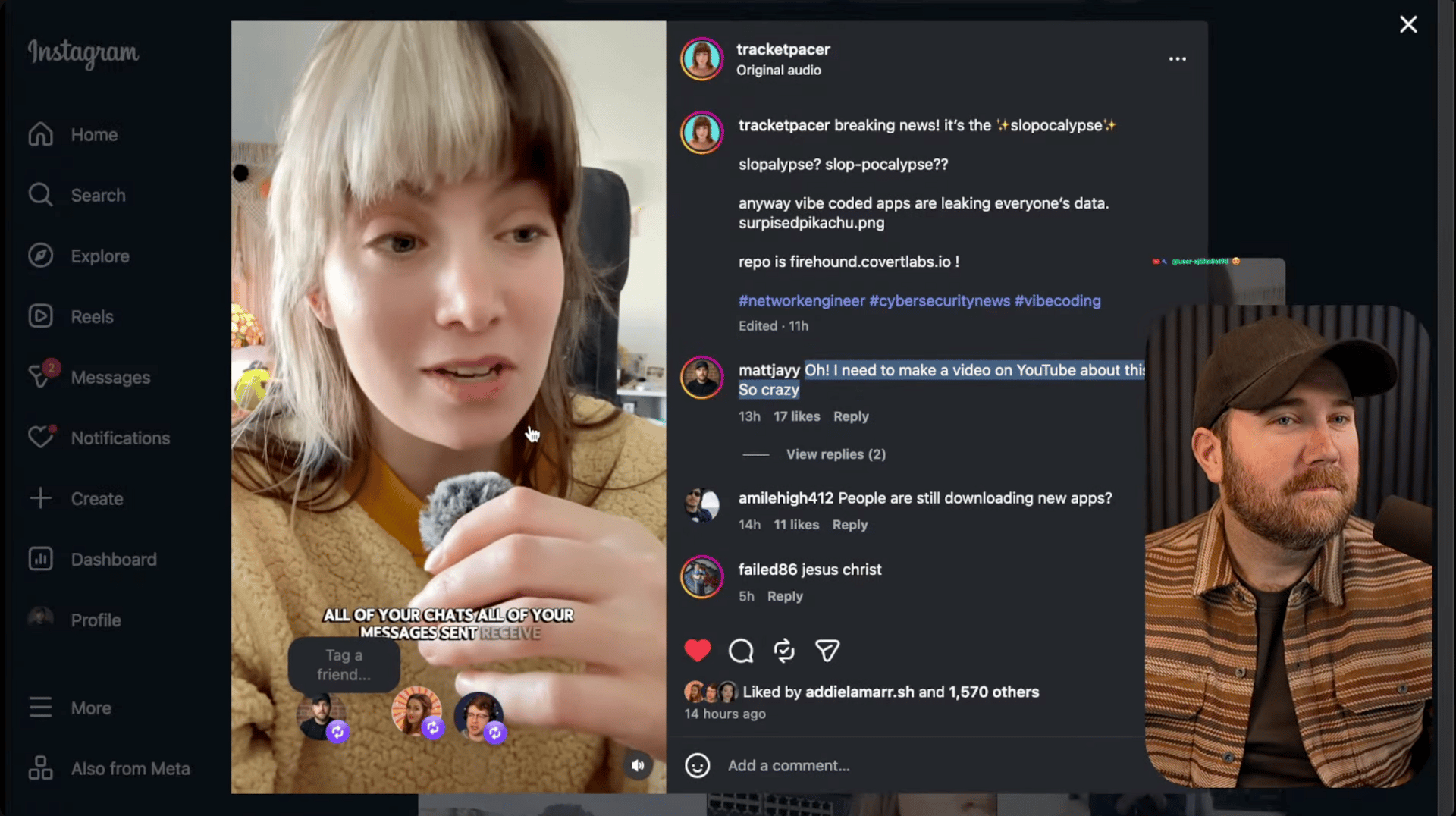

The Sloppocalypse is upon us, as @tracketpacer warned recently: a growing flood of low-quality, AI-assisted software is being pushed into production with little understanding of security, privacy, or long-term risk.

The point isn’t that AI is bad. It’s that AI has dramatically lowered the barrier to shipping something—without lowering the bar for what it means to ship responsibly.

In the livestream, I pointed to concrete examples circulating in the community, including datasets like Firehound, which tracks real-world patterns of insecure iOS apps using Firebase backends with dangerously permissive configurations. The takeaway wasn’t who built what; it was how common the pattern has become.

Why It Matters

None of these failures are novel.

Open databases. Weak authentication. Misconfigured permissions. We’ve been here before.

What’s different now—and what @tracketpacer’s “sloppocalypse” framing captures—is volume and velocity.

AI-assisted development makes it trivial to scaffold an app, connect a backend, and publish something that appears polished. When insecure defaults get baked into templates, tutorials, or copy-paste workflows, they don’t fail once. They fail everywhere.
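To make "insecure defaults baked into templates" concrete, here is a minimal sketch of the kind of check a builder could run before shipping. It assumes the backend is a Firebase Realtime Database, whose rules are a JSON document with `.read` and `.write` keys. The post does not say which Firebase product the affected apps use, and Firestore rules use a different syntax. The `find_open_rules` helper and the `TEMPLATE_RULES` sample are hypothetical, written for illustration only, and not taken from Firehound or any real app.

```python
import json


def find_open_rules(node, path="/"):
    """Return (path, rule_name) pairs where .read or .write is unconditionally open."""
    findings = []
    if not isinstance(node, dict):
        return findings
    for key, value in node.items():
        if key in (".read", ".write"):
            # A rule can be the JSON boolean true or the string "true".
            is_open = value is True or (
                isinstance(value, str) and value.strip() == "true"
            )
            if is_open:
                findings.append((path, key))
        elif isinstance(value, dict):
            # Descend into child paths such as "profiles" or "$uid".
            child = path.rstrip("/") + "/" + key
            findings.extend(find_open_rules(value, child))
    return findings


# Hypothetical rules file of the sort a quick-start template might leave behind:
# the root is world-readable and world-writable, which overrides the stricter
# per-user rules nested below it.
TEMPLATE_RULES = json.loads("""
{
  "rules": {
    ".read": true,
    ".write": true,
    "profiles": {
      "$uid": {
        ".read": "auth != null && auth.uid == $uid",
        ".write": "auth != null && auth.uid == $uid"
      }
    }
  }
}
""")

findings = find_open_rules(TEMPLATE_RULES["rules"])
for rule_path, rule_name in findings:
    print(f"open {rule_name} at {rule_path}")
```

This only catches rules that are literally `true`. It will not notice conditions that are permissive in practice, so treat it as a first-pass smoke test rather than a security review.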

The sloppocalypse isn’t about one bad app. It’s about entire classes of apps shipping the same mistakes simultaneously.

What People Miss

The most dangerous part of this trend isn’t technical sophistication. It’s misplaced trust.

Many of the apps caught up in these patterns are AI chat or assistant-style tools. When users interact with conversational systems, they assume privacy and intent. They share information they would never post publicly—personal details, sensitive conversations, voice notes.

The interface feels private. The backend may be anything but.

This creates a widening gap between how secure systems feel and how they’re actually built. And once users cross that trust threshold, the cost of getting it wrong goes far beyond a misconfiguration.

One Takeaway

The sloppocalypse isn’t an AI problem. It’s a scale problem.

AI didn’t invent insecure defaults. It made them easier to replicate and faster to deploy. And when inexperienced builders ship production systems without understanding the implications, security debt accumulates immediately.

Democratizing creation is mostly a good thing, but creation without guardrails isn’t neutral. Once real users are involved, security stops being optional, and trust becomes something you can lose faster than you ever earned it.