- Vulnerable U

When the Cyber Chief Becomes the Cautionary Tale: What a Public ChatGPT Upload Teaches Security Teams

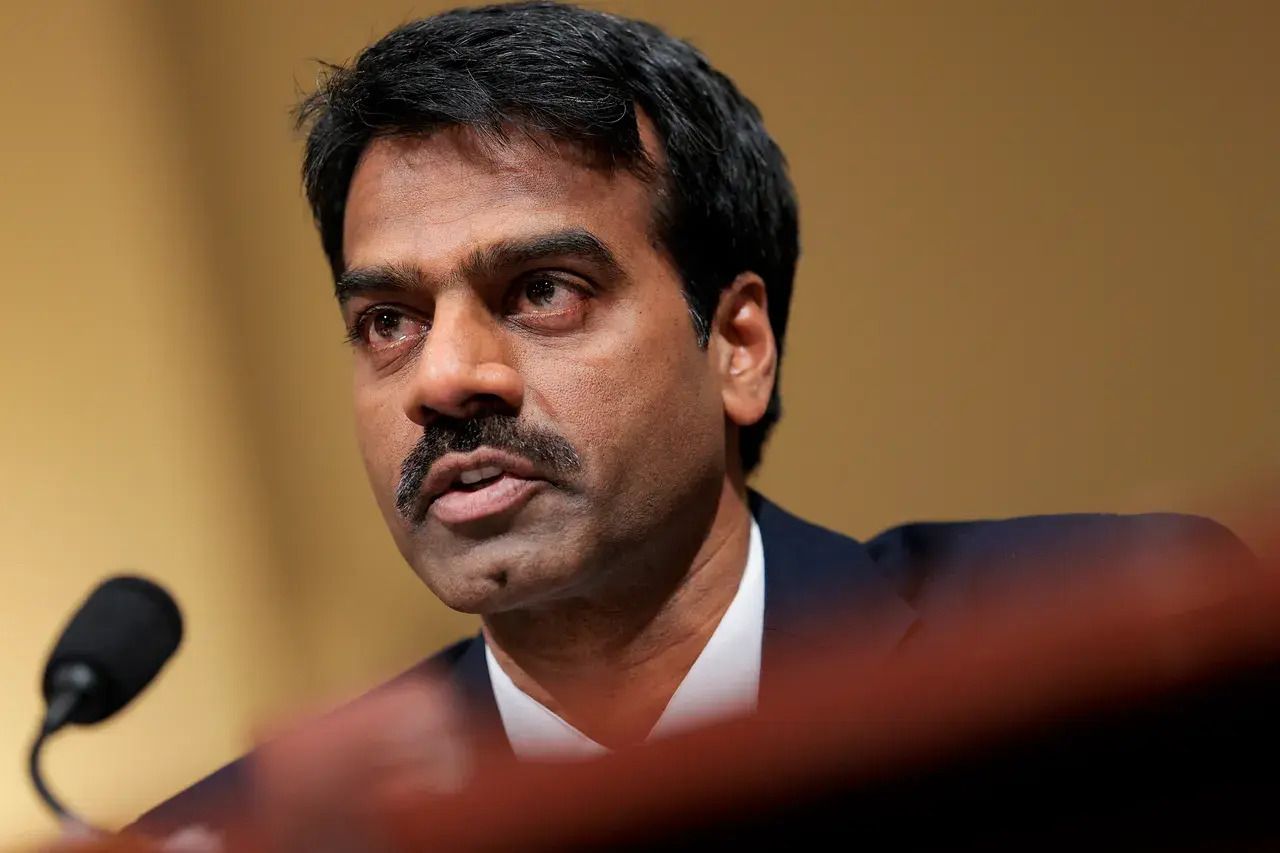

As AI tools work their way into everyday workflows, one of the nation’s top cybersecurity officials has become a reminder that people drive risk. In 2025, Acting CISA Director Madhu Gottumukkala uploaded sensitive “for official use only” government contracting documents into a public version of ChatGPT, triggering internal security alerts and a DHS-level review of potential data exposure.

At first glance, this may sound like bureaucratic drama fit for the political pages. The details make it a security story: the official had explicitly requested special permission to use ChatGPT at an agency where AI tools were otherwise blocked for most employees because of data sensitivity concerns. That exception, granted early in his tenure, created a blind spot that let sensitive material land outside controlled systems.

Consumer AI services like the public version of ChatGPT store what users submit on the provider’s servers and, depending on account settings, may use those inputs to train future models. Uploading internal government documents, even unclassified ones, into a service used by hundreds of millions of people worldwide moves that data outside the agency’s control, a leakage path that conventional data protection tools weren’t designed to stop. This isn’t hypothetical: the agency’s own cybersecurity sensors flagged the uploads.

The incident also underscores a broader organizational truth: governance, policy, and training must evolve faster than the tools people want to adopt. Enterprises have been wrestling with shadow IT, BYOD, and consumer cloud services for over a decade. Generative AI adds a qualitatively different variable. Without explicit guardrails and enforcement mechanisms, people with legitimate access can inadvertently turn powerful tools into vectors for exposure.

For security leaders, this should be a clarion call to action. First, review existing AI policies, especially those covering public, internet-facing models, and make sure they are enforceable rather than merely advisory. Add specific controls that keep sensitive data from reaching external AI endpoints, and route that work to approved private or on-premises deployments instead. Second, pair policy with real-time monitoring that can detect anomalous uploads or model interactions, because reliance on good behavior alone won’t hold at scale.
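The incident reports don’t describe the agency’s actual controls, so what follows is only a minimal Python sketch, under stated assumptions, of how those first two recommendations might combine in an egress policy check. The host lists, the sensitivity-marking regex, the `evaluate_upload` function, and the 50,000-byte alert threshold are all hypothetical placeholders, not any real product’s configuration. One design choice reflects the exception problem described earlier: a per-user exception grants access to public AI endpoints but does not bypass content inspection.

```python
import re
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical, illustrative host lists; not a complete inventory of AI services.
PUBLIC_AI_HOSTS = {"chatgpt.com", "chat.openai.com"}
APPROVED_AI_HOSTS = {"llm.internal.example.gov"}  # hypothetical private deployment

# Hypothetical keyword check for sensitivity markings; real DLP uses richer classifiers.
SENSITIVE_MARKINGS = re.compile(r"\b(?:FOR OFFICIAL USE ONLY|FOUO|CUI)\b", re.IGNORECASE)

# Hypothetical fixed size threshold standing in for a per-user baseline.
UPLOAD_ALERT_BYTES = 50_000


@dataclass
class Decision:
    action: str  # "allow", "block", or "alert"
    reason: str


def _host_in(host: str, hosts: set[str]) -> bool:
    """Match the host exactly or as a subdomain of a listed host."""
    return any(host == h or host.endswith("." + h) for h in hosts)


def evaluate_upload(user: str, url: str, payload: str, exceptions: set[str]) -> Decision:
    """Decide how to treat an outbound upload to an AI endpoint (hypothetical policy)."""
    host = (urlparse(url).hostname or "").lower()

    # Approved private or on-prem endpoints may receive sensitive work.
    if _host_in(host, APPROVED_AI_HOSTS):
        return Decision("allow", "approved private endpoint")
    # Hosts outside this policy's scope are left to other controls.
    if not _host_in(host, PUBLIC_AI_HOSTS):
        return Decision("allow", "outside this policy's scope")

    # Content inspection runs for everyone, including exception holders.
    if SENSITIVE_MARKINGS.search(payload):
        return Decision("block", "sensitivity marking sent to public AI endpoint")
    # Public AI endpoints are denied by default unless the user has an exception.
    if user not in exceptions:
        return Decision("block", "public AI endpoints blocked by default")

    # Uploads larger than the threshold return "alert" so monitoring can review them.
    size = len(payload.encode("utf-8"))
    if size > UPLOAD_ALERT_BYTES:
        return Decision("alert", f"{size}-byte upload exceeds threshold")
    return Decision("allow", "exception holder, no markings detected")
```

For example, a user on the exception list who submits a document marked “FOR OFFICIAL USE ONLY” to a public endpoint is still blocked, while the same user’s small, unmarked request is allowed. A production setup would replace the fixed threshold with per-user baselines and rely on a maintained classification scheme rather than a handful of keywords.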

Finally, foster cultural practices that bring AI risk into everyday security conversations. If even agency chiefs with seasoned careers can make these mistakes, nobody in your organization is immune. Security teams must integrate AI risk into training, tabletop exercises, and incident response playbooks now, not later. As this episode illustrates, the biggest risk isn’t the AI technology itself; it’s people using it without pausing to think.